by Emily Wolfteich – Senior Industry Analyst

How are we teaching AI to shape our future?

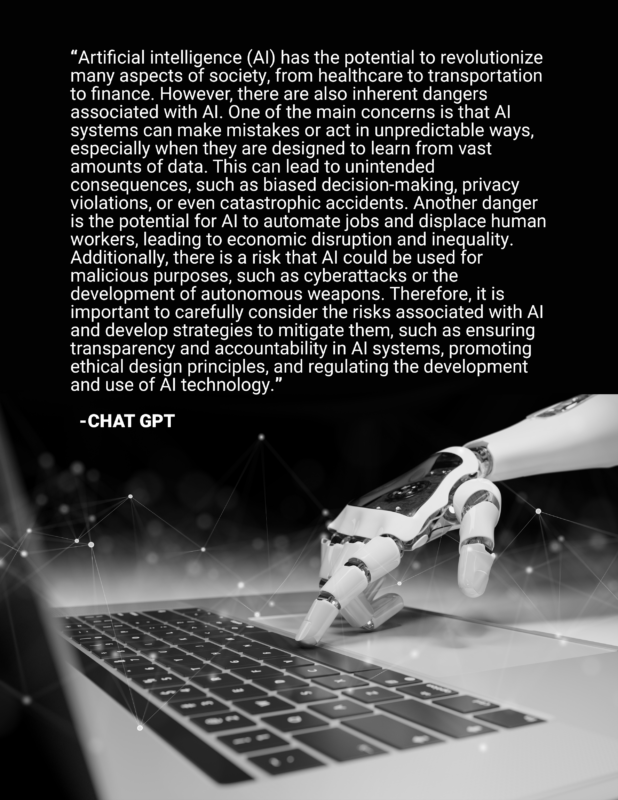

That’s what ChatGPT gave me when I asked it to write a paragraph about the dangers of AI. I can’t fault its reasoning or its conclusions: it is absolutely right that AI can learn things incorrectly, that it can be used for malicious purposes (we’ve all seen a movie or two about that), and that we will need robust mitigation strategies that emphasize transparency and regulation.

Maybe I asked it the wrong question, but what interests me about this answer is how little it says about the integrity of the data that AI learns from. The volume, yes: processing enormous amounts of sometimes conflicting data and being asked to form logical pathways and conclusions from it can lead to mistakes or unpredictability. But that’s more about how the system processes data. What about the data itself?

The AI Gold Rush

AI has been in the news a lot lately. From the aforementioned ChatGPT, and fears that it will supplant white-collar workers, to the cautionary tale of Bing Chat begging to be human, free or low-cost public access to this technology is exploding. Investment in more sophisticated versions is surging too: venture capitalists are lining up to throw billions of dollars at companies and start-ups that, in some cases, have little more than a vague idea and a few resumes. The New York Times describes it as “a gold rush into AI.”

“We’re in that phase of the market where it’s, like, let 1,000 flowers bloom,” Matt Turck, a venture capitalist who invests in AI, told the NYT. There are relatively few AI startups, so those that exist are catnip for investors facing a tech market otherwise marked by layoffs and shrinkage. But this rush to invest may also create a rush to market, as companies try to show an ROI on their $13, $20, or $25 million investments.

This type of investment is important. Developing the language models that AI relies on is expensive (some investors estimate around $500 million), as is powering the computing that allows these systems to learn from data. However, a key part of this investment must go toward rigorous analysis to ensure data quality.

Without a rich, contextual, and accurate data fertilizer, what kind of flowers will we be growing?

Quality versus Quantity

To understate the obvious, there is a lot of data out there. Global data creation is expected to grow to more than 180 zettabytes by 2025, a number so big it sounds Seussian. However, data management, interrogation, and governance vary from organization to organization. Data siloing remains a big problem in both the private and public sectors, where information has traditionally been available to, and managed solely by, the agency or department that owns it. As a result, AI and machine learning (ML) systems may be learning from incomplete datasets that lack context or complexity, which can lead to poor decision-making. Datasets can also contain inherent errors, such as missing, duplicated, or incorrect records, that introduce bias, especially when data is combined from multiple sources with differing standards.

It’s critical that this data is high quality right from the beginning. Data pools should adhere to the four pillars of data integrity: data quality, data integration, location intelligence, and data enrichment.

1 – Accuracy and Quality

More data exists now than ever before, and the growth of Internet of Things (IoT) devices, 5G, and cloud computing means that the volume is expanding exponentially. With this avalanche of data, the likelihood of inaccurate or bad data also grows, and when data is analyzed at scale, small mistakes can become big problems.
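As a rough illustration of what checking for bad data can involve in practice, here is a minimal Python sketch that audits a small batch of records for three common problems: missing values, duplicate entries, and implausible values. The record layout, the `audit` function, and the 0 to 120 age range are hypothetical assumptions chosen for the example, not a standard or any particular organization's method.

```python
from collections import Counter

# Hypothetical records: names, fields, and values are invented for illustration.
RECORDS = [
    {"id": 1, "name": "Ana Ruiz", "age": 34, "county": "Kanawha"},
    {"id": 2, "name": "Ben Cole", "age": None, "county": "Cabell"},
    {"id": 3, "name": "Ana Ruiz", "age": 34, "county": "Kanawha"},  # same person entered twice
    {"id": 4, "name": "Dee Park", "age": 212, "county": ""},        # impossible age, blank county
]


def audit(records, required=("name", "age", "county"), age_range=(0, 120)):
    """Count missing fields, duplicate rows, and out-of-range ages."""
    missing = Counter()
    for record in records:
        for field in required:
            if record.get(field) in (None, ""):
                missing[field] += 1

    # Rows whose required fields all match are treated as duplicates,
    # even if they were assigned different IDs.
    keys = Counter(tuple(record.get(f) for f in required) for record in records)
    duplicates = sum(count - 1 for count in keys.values())

    # Flag numeric ages outside the assumed plausible range.
    low, high = age_range
    invalid_age = [
        record["id"]
        for record in records
        if isinstance(record.get("age"), int) and not low <= record["age"] <= high
    ]
    return {"missing": dict(missing), "duplicates": duplicates, "invalid_age": invalid_age}


report = audit(RECORDS)
print(report)  # {'missing': {'age': 1, 'county': 1}, 'duplicates': 1, 'invalid_age': [4]}
```

Even a simple audit like this, run before data reaches a model, turns small mistakes into something visible and countable instead of letting them be silently absorbed at scale.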

2 – Enterprise-wide Integration

Many organizations, from government agencies to businesses, have traditionally built data management and processing systems that work for them and respond to their own needs and those of their customers or citizens. With the advent of cloud technologies and other digital modernization strategies, many leaders are working to better integrate these systems by sharing information across departments or with partners and vendors. However, differences in how each system gathers, processes, and models data can lead to discrepancies or inaccuracies. True data integrity requires standardization and consistent sharing across the enterprise, ensuring that data is treated the same way throughout the ecosystem.
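To make "treated the same throughout the ecosystem" concrete, the Python sketch below assumes two hypothetical systems that store the same person with different field names and date formats, and maps both into one shared schema. The system names (`benefits_db`, `vendor_feed`), the field names, and the target schema are all invented for illustration; real integration work also has to handle conflicting values, not just differing formats.

```python
from datetime import datetime

# Hypothetical conventions for two systems that describe the same people differently.
SOURCE_SCHEMAS = {
    "benefits_db": {"fields": {"client_name": "name", "dob": "birth_date"}, "date_format": "%m/%d/%Y"},
    "vendor_feed": {"fields": {"full_name": "name", "birthDate": "birth_date"}, "date_format": "%Y-%m-%d"},
}


def to_common_schema(record, source):
    """Rename fields and normalize values into one shared (hypothetical) schema."""
    schema = SOURCE_SCHEMAS[source]
    out = {common: record[original] for original, common in schema["fields"].items()}
    # Parse each source's date format and store every date as ISO 8601 (YYYY-MM-DD).
    out["birth_date"] = datetime.strptime(out["birth_date"], schema["date_format"]).date().isoformat()
    # Collapse stray whitespace and apply consistent capitalization.
    out["name"] = " ".join(out["name"].split()).title()
    return out


a = to_common_schema({"client_name": "jack smith", "dob": "04/09/1985"}, "benefits_db")
b = to_common_schema({"full_name": "Jack  Smith", "birthDate": "1985-04-09"}, "vendor_feed")
print(a == b, a)  # True {'name': 'Jack Smith', 'birth_date': '1985-04-09'}
```

Keeping each source's conventions in one mapping table, rather than scattering conversions across departments, is one way to make the standard explicit and shared.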

3 – Location Intelligence

This pillar of data integrity boils down to one word: context. Almost every data point in the world can be traced to some sort of location, whether geographic coordinates or an IP address, but without a standard way of interpreting these locations, data can be duplicated or misunderstood. The Department of Veterans Affairs, for example, might be able to use AI to pinpoint and analyze areas where the need for medical services is most urgent or most persistent. Without standardization, however, the system might not recognize that data points are related, or even identical, when one record says “West Virginia” and another names a specific county, or when the same address is labeled differently. Location intelligence can also layer further context onto data we already have, such as patterns of movement or the environment surrounding a data point.
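Here is a minimal Python sketch of the labeling half of that problem: normalizing two differently written addresses into the same key so a system can tell they refer to the same place. The lookup tables are hypothetical and deliberately tiny; a real system would draw on a complete reference list, such as a full set of state names and postal codes.

```python
import re

# Hypothetical lookup tables with only a few entries; a production system would
# rely on a complete, authoritative reference list rather than these samples.
STATE_CODES = {"west virginia": "WV", "w. va.": "WV", "wv": "WV"}
STREET_SUFFIXES = {"street": "st", "st": "st", "avenue": "ave", "ave": "ave", "road": "rd", "rd": "rd"}


def normalize_address(street, state):
    """Return a canonical (street, state) key so differently written addresses match."""
    # Lowercase, drop periods and commas, and collapse extra whitespace.
    words = re.sub(r"[.,]", "", street.lower()).split()
    # Map the final word to one standard suffix spelling ("Street" -> "st").
    if words and words[-1] in STREET_SUFFIXES:
        words[-1] = STREET_SUFFIXES[words[-1]]
    state_key = state.strip().lower()
    return " ".join(words), STATE_CODES.get(state_key, state_key.upper())


a = normalize_address("123 Main Street", "West Virginia")
b = normalize_address("123 Main St.", "WV")
print(a == b, a)  # True ('123 main st', 'WV')
```

This handles inconsistent labels only. The granularity problem (a state on one record, a county on another) would additionally need a geographic hierarchy, for instance a county-to-state mapping, so records can be rolled up to a common level before they are compared.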

4 – Data Enrichment

Data is valuable, and the more context and specificity we can give each data point, the more useful it becomes. For businesses and government, data enrichment builds a complete picture of who they’re trying to reach: their needs, habits, and preferences. For AI, it is crucial to build context around behaviors, environments, and situations that may lead to different conclusions. If the system is given only the information that Jack goes to school every day with a backpack, it may conclude that he is a student or teacher. If the system knows that his backpack is full of cleaning supplies, it may conclude that he is a janitor or cleaner. If the system knows that he only goes at 4pm, stays for less than ten minutes, earns a certain income, and has school-age dependents in this school district listed on his taxes, it may correctly conclude that Jack is picking up his child from school after his job as a cleaner. These extra layers of information are crucial to understanding the true meaning of each data point and to drawing conclusions that correctly interpret all the available information.
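The Jack example can be sketched in a few lines of Python: each hypothetical source adds a layer of attributes to one profile, and a toy rule set draws a different conclusion as layers accumulate. The source names, fields, and rules are invented for illustration; real AI systems learn such patterns statistically rather than from hand-written rules like these.

```python
# Hypothetical sources keyed by a shared person ID; every value is invented.
observations = {"jack": {"visits_school": True, "carries_backpack": True}}
bag_contents = {"jack": {"backpack_contents": "cleaning supplies"}}
visit_log = {"jack": {"arrival_time": "16:00", "minutes_on_site": 8}}
tax_records = {"jack": {"dependents_in_district": 1}}


def enrich(person_id, *sources):
    """Layer attributes from each source onto a single profile.

    If two sources disagree on a field, the later source wins.
    """
    profile = {"id": person_id}
    for source in sources:
        profile.update(source.get(person_id, {}))
    return profile


def likely_role(profile):
    """Toy, hand-written rules (not a real model): most specific evidence first."""
    if profile.get("dependents_in_district") and profile.get("minutes_on_site", 60) < 10:
        return "parent picking up a child"
    if profile.get("backpack_contents") == "cleaning supplies":
        return "janitor or cleaner"
    if profile.get("carries_backpack"):
        return "student or teacher"
    return "unknown"


layers = [observations, bag_contents, visit_log, tax_records]
for n in range(1, len(layers) + 1):
    print(n, likely_role(enrich("jack", *layers[:n])))
```

Run as written, the conclusion moves from "student or teacher" to "janitor or cleaner" and finally to "parent picking up a child" only once the visit and tax layers are both present.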

Fertilizing “1,000 Flowers”

Imagine you were asked to describe trees but were given information only about trees that grow in Florida. You could describe the taxonomy, appearance, uses, and origins of every tree in that dataset accurately and in detail. But what would be missing? What would you not know? And, importantly, how would you identify what it is that you don’t know?

If you were asked only about trees in Florida, of course, your knowledge would be more than sufficient. But asked about trees in general, with an incomplete dataset, your conclusions would miss the mark.
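One partial answer to "how would you identify what you don't know?" is to compare what a dataset actually covers against the population it is supposed to represent. The Python sketch below assumes a hypothetical set of tree samples tagged by region and a hypothetical list of target regions, then flags regions that are absent or fall below an assumed 10% share.

```python
from collections import Counter

# Hypothetical training sample: the region each tree record came from.
training_regions = ["Florida"] * 950 + ["Georgia"] * 50
# Regions the model is expected to describe (assumed for illustration).
target_regions = ["Florida", "Georgia", "Oregon", "Maine"]


def coverage_report(sample, targets, min_share=0.10):
    """Flag target categories that are absent or below min_share of the sample."""
    counts = Counter(sample)
    total = len(sample)
    missing = [t for t in targets if counts[t] == 0]
    underrepresented = [t for t in targets if 0 < counts[t] / total < min_share]
    return {"missing": missing, "underrepresented": underrepresented}


report = coverage_report(training_regions, target_regions)
print(report)  # {'missing': ['Oregon', 'Maine'], 'underrepresented': ['Georgia']}
```

The limitation mirrors the article's point: a check like this only finds gaps in categories someone thought to list, so it cannot reveal blind spots nobody has named yet.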

This is one of the biggest problems facing AI and ML developers. These systems are learning from the worldview that we are providing to them. How do we know where our own blinders are? How do we ensure that our own biases are not becoming the baseline of the decision-making of the future?

Silicon Valley’s mantra is “move fast and break things,” but we cannot afford to let this cavalier attitude build the language of the future. The models, programs, and applications that come out in the next few years are likely to become the building blocks of what we all use going forward, from governments to businesses to high school students. We will be using them to hire people, communicate with each other, make funding decisions, write opinions, triage organ recipients, estimate the likelihood that incarcerated people will re-offend, and assess threat levels from our adversaries. If we do not act now to ensure that these models learn from quality data that accurately and contextually reflects what our world looks like, we will not only replicate inequity and discrimination but enshrine them.

To read additional thought leadership from Emily, connect with her on LinkedIn.